Top AI Frameworks in 2025: How to Choose the Best Fit for Your Project

1. Introduction: Navigating the AI Framework Landscape

The world of artificial intelligence is evolving at breakneck speed. What was cutting-edge last year is now foundational, and new advances arrive almost daily. That pace means the tools we use to build AI, the AI frameworks, are constantly changing too. For software developers, AI/ML engineers, tech leads, CTOs, and business decision-makers, understanding this landscape is essential.

The Ever-Evolving World of AI Development

From sophisticated large language models (LLMs) driving new generative capabilities to intricate computer vision systems powering autonomous vehicles, AI applications are becoming more complex and pervasive across every industry. Developers and businesses alike are grappling with how to harness this power effectively, facing challenges in scalability, efficiency, and ethical deployment. At the heart of building these intelligent systems lies the critical choice of the right AI framework.

Why Choosing the Right Framework Matters More Than Ever

In 2025, selecting an AI framework isn't just a technical decision; it's a strategic one that can shape your project's trajectory. The right framework can accelerate development cycles, optimize model performance, and streamline deployment, all of which improve your project's odds of success and ROI. Conversely, an ill-suited choice can lead to bottlenecks, higher development costs, limited scalability, and missed market opportunities. Understanding the current landscape of AI tools and carefully aligning your choice with your project's specific needs is crucial in a competitive field.

2. Understanding AI Frameworks: The Foundation of Intelligent Systems

What Exactly is an AI Framework?

An AI framework is a library or platform that provides a structured set of pre-built tools and functions for building models. Its primary purpose is to make developing machine learning (ML) and deep learning (DL) models easier, faster, and more efficient. Think of it as a specialized, high-level toolkit for AI development: instead of coding every mathematical operation, algorithm, or neural network layer from scratch, developers perform intricate tasks with a few lines of code and can focus on model architecture and data.

Key Components and Core Functions

Most AI frameworks come equipped with several core components that underpin their functionality:

- Automatic Differentiation: This is a fundamental capability, particularly critical for training deep learning models. It computes the gradient of the loss with respect to every model parameter, which is how neural networks learn from data (via backpropagation).

- Optimizers: These are algorithms that adjust model parameters (weights and biases) during training to minimize errors and improve model performance. Common examples include Adam, SGD, and RMSprop.

- Neural Network Layers: Frameworks provide ready-to-use building blocks (e.g., convolutional layers for image processing, recurrent layers for sequential data, and dense layers) that can be easily stacked and configured to create complex neural network architectures.

- Data Preprocessing Tools: Utilities within frameworks simplify the often complex tasks of data cleaning, transformation, augmentation, and loading, ensuring data is in the right format for model training.

- Model Building APIs: High-level interfaces allow developers to define, train, evaluate, and save their models with relatively simple and intuitive code.

- GPU/TPU Support: Crucially, most modern AI frameworks are optimized to leverage specialized hardware like Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) for parallel computation, dramatically accelerating the computationally intensive process of deep learning model training.
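To make the automatic differentiation and optimizer bullets concrete, here is a deliberately tiny, framework-free sketch of what a framework automates: reverse-mode automatic differentiation over scalars, followed by a plain SGD update. The Value class is hypothetical and only illustrates the idea; real frameworks work on tensors, support far more operations, and dispatch the math to GPUs/TPUs.

```python
class Value:
    """A scalar that remembers how it was computed, so gradients can flow back.

    Hypothetical teaching class, not part of any framework.
    """

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes self.grad to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        """Apply the chain rule from this node back to every input."""
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)  # parents are appended before children

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


# Gradient check: f(a, b) = a * b + a, so df/da = b + 1 and df/db = a.
a, b = Value(2.0), Value(3.0)
f = a * b + a
f.backward()
print(a.grad, b.grad)  # 4.0 2.0

# Fit y = 3x with a single weight, a squared-error loss, and plain SGD.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
w = Value(0.0)
learning_rate = 0.02
for _ in range(200):
    w.grad = 0.0  # gradients accumulate, so reset before each backward pass
    loss = Value(0.0)
    for x, y in data:
        err = w * x + (-y)
        loss = loss + err * err
    loss.backward()
    w.data -= learning_rate * w.grad  # SGD update: step against the gradient
print(round(w.data, 4))  # 3.0
```

A framework does the same bookkeeping (record operations, then replay them in reverse), but at tensor scale and with optimized kernels.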

The Role of Frameworks in Streamlining AI Development

- Faster Prototyping: Quickly test and refine ideas by assembling models from pre-built components, accelerating the experimentation phase.

- Higher Efficiency: Significantly reduce development time and effort by reusing optimized, built-in tools and functions rather than recreating them.

- Scalability: Build robust models that can effectively handle vast datasets and scale efficiently for deployment in production environments.

- Team Collaboration: Provide a common language, set of tools, and established best practices that streamline teamwork and facilitate easier project handover.

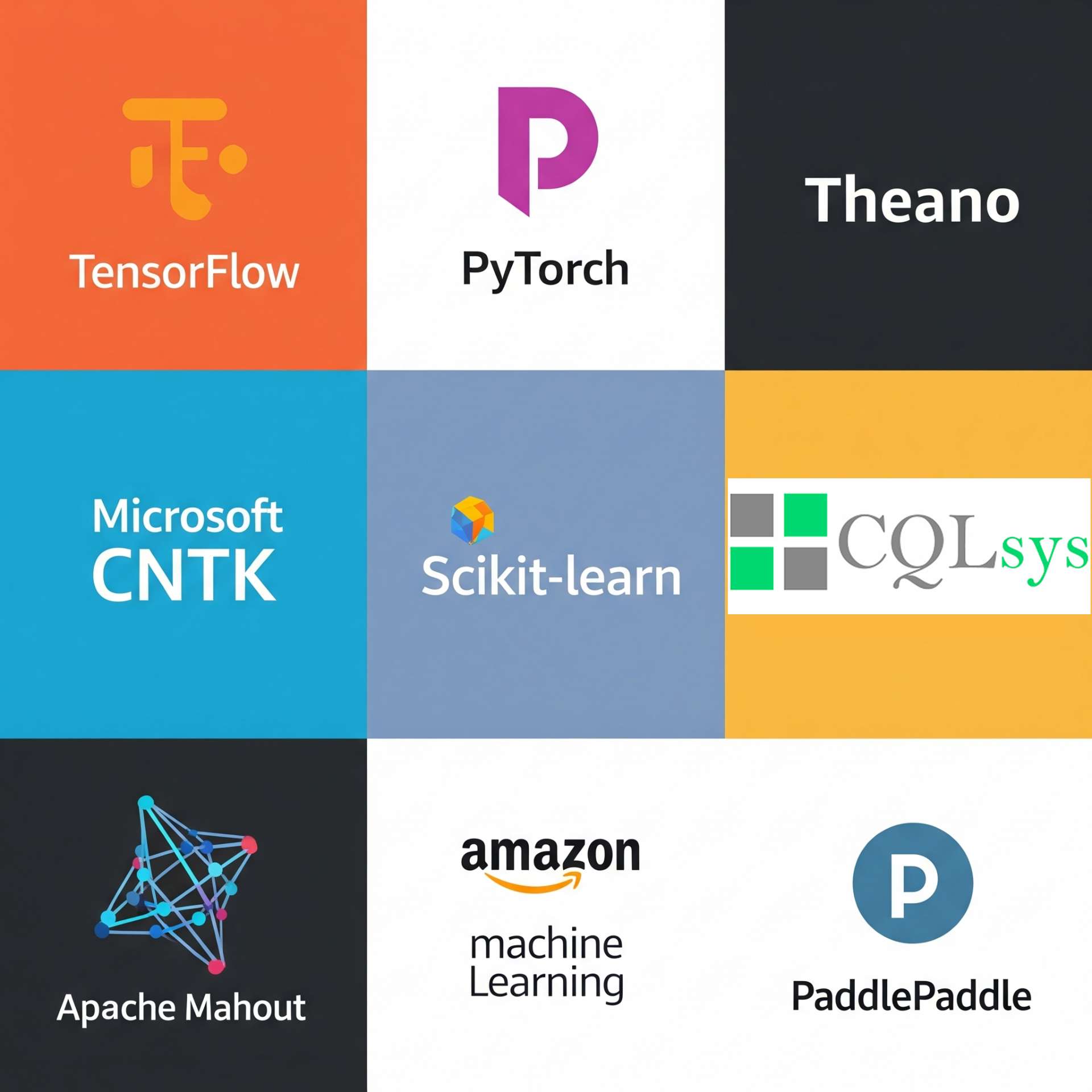

3. The Leading AI Frameworks in 2025: A Deep Dive

TensorFlow: Google's Enduring Giant

TensorFlow, developed by Google, remains one of the most widely adopted deep learning frameworks, especially in large-scale production environments.

Key Features & Strengths:

- Comprehensive Ecosystem: Boasts an extensive ecosystem, including TensorFlow Lite (for mobile and edge devices), TensorFlow.js (for web-based ML), and TensorFlow Extended (TFX) for end-to-end MLOps pipelines.

- Scalable & Production-Ready: Designed from the ground up for massive computational graphs and robust deployment in enterprise-level solutions.

- Visualization: TensorBoard offers powerful tools for monitoring training metrics, debugging models, and understanding network architectures.

- Versatile: Highly adaptable for a wide range of ML tasks, from academic research to complex, real-world production applications.

- Ideal Use Cases: Large-scale enterprise AI solutions, complex research projects requiring fine-grained control, production deployment of deep learning models, mobile and web AI applications, and MLOps pipeline automation.
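TensorFlow's production story centers on the SavedModel format, which TensorFlow Serving and the TensorFlow Lite converter (tf.lite.TFLiteConverter.from_saved_model) can consume. The following minimal sketch, assuming TensorFlow 2.x is installed, exports a toy tf.Module to a temporary directory and reloads it without the original Python class. LinearScaler is a hypothetical name used only for illustration.

```python
import tempfile

import tensorflow as tf


class LinearScaler(tf.Module):
    """Hypothetical toy model: multiplies its input by one trainable weight."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(2.0)

    # A fixed input signature lets the exported graph accept any 1-D float32 tensor.
    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        return self.w * x


model = LinearScaler()
export_dir = tempfile.mkdtemp()
tf.saved_model.save(model, export_dir)  # writes a SavedModel directory

# The restored object does not need the LinearScaler class definition.
restored = tf.saved_model.load(export_dir)
result = restored(tf.constant([1.0, 2.0, 3.0])).numpy()
print(result)
```

Because the graph and weights live in the exported directory, a serving system can load the model without importing your training code.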

PyTorch: The Research & Flexibility Champion

PyTorch, originally developed by Meta (formerly Facebook) AI Research and now governed by the PyTorch Foundation under the Linux Foundation, has become the preferred choice in many academic and research communities and is rapidly gaining ground in production.

Key Features & Strengths:

- Flexible Debugging: Its dynamic computation graph (known as "define-by-run") makes debugging significantly easier and accelerates experimentation.

- Python-Friendly: Its deep integration with the Python ecosystem and intuitive API makes it feel natural and accessible to Python developers, contributing to a smoother learning curve for many.

- Research-Focused: Widely adopted in academia and research for its flexibility, allowing for rapid prototyping of novel architectures and algorithms.

- Production-Ready: Has matured significantly in production, with tools like PyTorch Lightning (a separately maintained training library) for streamlined training and TorchServe for model serving.

- Ideal Use Cases: Rapid prototyping, advanced AI research, projects requiring highly customized models and complex neural network architectures, and startups focused on quick iteration and experimentation.
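The define-by-run idea is easiest to see with data-dependent control flow. In this minimal sketch (assuming PyTorch is installed; RepeatedBlock is a hypothetical module), the number of times the layer runs is an ordinary Python argument, and autograd differentiates through whichever path actually executed.

```python
import torch
from torch import nn


class RepeatedBlock(nn.Module):
    """Hypothetical module: applies one linear layer a variable number of times."""

    def __init__(self, width: int = 4):
        super().__init__()
        self.layer = nn.Linear(width, width)

    def forward(self, x: torch.Tensor, n_steps: int) -> torch.Tensor:
        # Ordinary Python control flow: the graph is recorded as this loop runs,
        # so it can differ from one call to the next.
        for _ in range(n_steps):
            x = torch.relu(self.layer(x))
            # A print() or breakpoint() here works like in any Python code.
        return x.sum()


torch.manual_seed(0)
model = RepeatedBlock()
x = torch.randn(2, 4)

for n_steps in (1, 3):
    model.zero_grad()
    loss = model(x, n_steps)
    loss.backward()  # gradients flow back through however many steps actually ran
    print(n_steps, model.layer.weight.grad.shape)
```

Nothing is compiled ahead of time here, which is why standard Python debuggers and print statements work inside forward().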

JAX: Google's High-Performance Differentiable Programming

JAX, also from Google, is gaining substantial traction for its powerful automatic differentiation and high-performance numerical computation capabilities, particularly in cutting-edge research.

Key Features & Strengths:

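- Advanced Autodiff: Offers powerful, flexible automatic differentiation in both reverse and forward mode, covering gradients of scalar functions, Jacobians and Hessians of vector-valued functions, and higher-order derivatives obtained by composing grad with itself.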

- XLA Optimized: Leverages the Accelerated Linear Algebra (XLA) compiler to compile and fuse operations for efficient execution on CPUs, GPUs, and TPUs.

- Composable Functions: Enables easy composition of functional transformations like grad (for gradients), jit (for just-in-time compilation), and vmap (for automatic vectorization) to create highly optimized and complex computations.

- Research-Centric: Increasingly popular in advanced AI research for exploring novel AI architectures and training massive models.

- Ideal Use Cases: Advanced AI research, developing custom optimizers and complex loss functions, high-performance computing, exploring novel AI architectures, and training deep learning models on TPUs where maximum performance is critical.
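The grad, jit, and vmap transformations compose as ordinary function wrappers. This sketch, assuming JAX is installed, computes per-example gradients of a hypothetical squared-error loss for a linear model, then takes a second derivative by applying grad twice.

```python
import jax
import jax.numpy as jnp


def squared_error(w, x, y):
    """Squared error of a linear prediction for a single example (hypothetical loss)."""
    return (jnp.dot(x, w) - y) ** 2


# grad: derivative with respect to the first argument (w).
# vmap: map over a batch of (x, y) while sharing w (in_axes=None for w).
# jit: compile the composed function with XLA.
per_example_grads = jax.jit(jax.vmap(jax.grad(squared_error), in_axes=(None, 0, 0)))

w = jnp.array([1.0, 1.0])
xs = jnp.array([[1.0, 2.0], [3.0, 4.0]])
ys = jnp.array([0.0, 0.0])
grads = per_example_grads(w, xs, ys)
print(grads)  # one gradient row per example


def cube(t):
    return t ** 3


# Higher-order derivatives: the second derivative of t**3 is 6 * t, so 12 at t = 2.
second_derivative = jax.grad(jax.grad(cube))
print(second_derivative(2.0))
```

Each transformation returns a new function, so the order of wrapping is a design decision: here vmap sits outside grad so that each example gets its own gradient.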

Keras (with TensorFlow/JAX/PyTorch backend): Simplicity Meets Power

Keras is a high-level API designed for fast experimentation with deep neural networks. Its strength lies in its user-friendliness and, since Keras 3, its ability to act as a common interface to other deep learning frameworks.

Key Features & Strengths:

- Beginner-Friendly: Offers a simple, intuitive, high-level API, making it an excellent entry point for newcomers to deep learning.

- Backend Flexibility: Can run seamlessly on top of TensorFlow, JAX, or PyTorch, allowing developers to leverage the strengths of underlying frameworks while maintaining Keras's ease of use.

- Fast Prototyping and Experimentation: Its straightforward design makes it quick to build, train, and test models, and supports rapid, iterative refinement.

- Ideal Use Cases: Quick model building and iteration, educational purposes, projects where rapid prototyping is a priority, and developers who prefer a high-level abstraction to focus on model design rather than low-level implementation details.

4. Beyond the Hype: Critical Factors for Choosing Your AI Framework

Project Requirements & Scope

- Type of AI Task: Different frameworks excel in specific domains. Are you working on computer vision (CV), natural language processing (NLP), time series analysis, reinforcement learning, or traditional tabular data?

- Deployment Scale: Where will your model run? On a small edge device, a mobile phone, a web server, or a massive enterprise cloud infrastructure? The framework's support for various deployment targets is crucial.

- Performance Needs: Does your application demand ultra-low latency, high throughput (processing many requests quickly), or efficient memory usage? Benchmarking and framework optimization capabilities become paramount.
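The performance questions above are best answered by measurement on your own hardware and inputs. Here is a minimal, framework-agnostic timing harness as a sketch; toy_predict is a hypothetical stand-in for your model's inference call, and the p95 figure uses a simple sorted-index estimate rather than an interpolated percentile.

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(predict: Callable[[object], object], inputs: Sequence[object], warmup: int = 5) -> dict:
    """Time one call per input and summarize latency and throughput."""
    for x in inputs[:warmup]:  # warm-up calls absorb caching, JIT compilation, lazy init
        predict(x)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    latencies.sort()
    return {
        "p50_ms": 1000 * statistics.median(latencies),
        "p95_ms": 1000 * latencies[int(0.95 * (len(latencies) - 1))],
        "throughput_per_s": len(inputs) / total,
    }


def toy_predict(x):
    """Hypothetical stand-in for a real model's inference call."""
    return sum(v * v for v in x)


report = benchmark(toy_predict, [[float(i)] * 256 for i in range(200)])
print(report)
```

For GPU-backed frameworks, work is often launched asynchronously, so make the timed call wait for its result (for example, torch.cuda.synchronize() in PyTorch or block_until_ready() on a JAX array) before stopping the timer.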

Community & Ecosystem Support

- Documentation and Tutorials: Are there clear, comprehensive guides, tutorials, and examples available to help your team get started and troubleshoot issues?

- Active Developer Community & Forums: A strong, vibrant community means more shared knowledge, faster problem-solving, and continuous improvement of the framework.

- Available Pre-trained Models & Libraries: Access to pre-trained models (like those from Hugging Face) and readily available libraries for common tasks can drastically accelerate development time.

Learning Curve & Team Expertise

- Onboarding: How easily can new team members learn the framework's intricacies and become productive contributors to the AI development effort?

- Existing Skills: Does the framework align well with your team's current expertise in Python, specific mathematical concepts, or other relevant technologies? Leveraging existing knowledge can boost efficiency.

Flexibility & Customization

- Ease of Debugging and Experimentation: A flexible framework allows for easier iteration, understanding of model behavior, and efficient debugging, which is crucial for research and complex AI projects.

- Support for Custom Layers and Models: Can you easily define and integrate custom neural network layers or entirely new model architectures if your AI project requires something unique or cutting-edge?

Integration Capabilities

- Compatibility with Existing Tech Stack: How well does the framework integrate with your current programming languages, databases, cloud providers, and existing software infrastructure? Seamless integration saves development time.

- Deployment Options: Does the framework offer clear and efficient pathways for deploying your trained models to different environments (e.g., mobile apps, web services, cloud APIs, IoT devices)?

Hardware Compatibility

- GPU/TPU Support and Optimization: For deep learning frameworks, efficient utilization of specialized hardware like GPUs and TPUs is paramount for reducing training time and cost. Ensure the framework offers robust and optimized support for the hardware you plan to use.
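Before committing to a framework, it is worth confirming that it actually sees your accelerators. This sketch checks whichever of PyTorch, TensorFlow, and JAX are installed and skips the rest. It only covers what these particular calls report: on Apple silicon, for instance, PyTorch exposes the GPU through torch.backends.mps.is_available() instead of CUDA.

```python
import importlib.util


def detect_accelerators() -> dict:
    """Report which installed frameworks can see a GPU/TPU; missing ones are skipped."""
    report = {}
    if importlib.util.find_spec("torch") is not None:
        import torch
        report["torch_cuda"] = torch.cuda.is_available()
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf
        report["tensorflow_gpus"] = len(tf.config.list_physical_devices("GPU"))
    if importlib.util.find_spec("jax") is not None:
        import jax
        report["jax_backend"] = jax.default_backend()  # e.g. "cpu", "gpu", or "tpu"
    return report


hardware = detect_accelerators()
print(hardware)
```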

Licensing and Commercial Use Considerations

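- Open-source vs. Proprietary Licenses: Most leading AI frameworks are open-source under permissive licenses (e.g., Apache 2.0 for TensorFlow, JAX, and Keras; BSD-3-Clause for PyTorch and scikit-learn), which generally allow commercial use. However, always review the specific license to ensure it aligns with your commercial use case and intellectual property requirements, and check pre-trained model weights separately, since they often carry their own licenses.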

5. Real-World Scenarios: Picking the Right Tool for the Job

Scenario 1: Rapid Prototyping & Academic Research

Best Fit: PyTorch, Keras (with any backend), or JAX. PyTorch's dynamic graphs and Keras's high-level API allow quick iteration, experimentation, and easier debugging, which are crucial in research settings. JAX is gaining ground here because its composable transformations (grad, jit, vmap) and XLA compilation make it well suited to exploring novel architectures.

Scenario 2: Large-Scale Enterprise Deployment & Production

Best Fit: TensorFlow or PyTorch (with production tools like TorchServe/Lightning). TensorFlow's robust ecosystem (TFX, SavedModel format) and emphasis on scalability make it a strong contender. PyTorch's production readiness has also significantly matured, making it a viable choice for large-scale AI development and deployment.

Scenario 3: Developing a Custom NLP/LLM Application

Best Fit: Hugging Face Transformers (running on top of PyTorch or TensorFlow). This ecosystem offers one of the fastest ways to use and fine-tune state-of-the-art large language models (LLMs), and its large hub of pre-trained checkpoints lets most NLP projects start from an existing model instead of training from scratch.
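As a minimal sketch of how little code the Transformers pipeline API needs, assuming the transformers package and a PyTorch backend are installed and the machine can reach the Hugging Face Hub to download the checkpoint. This runs inference only; fine-tuning would typically go through the library's Trainer API or a custom training loop.

```python
from transformers import pipeline

# Downloads the checkpoint from the Hugging Face Hub on first use (network required).
classifier = pipeline(
    "sentiment-analysis",
    model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
)

results = classifier([
    "This framework made fine-tuning painless.",
    "The documentation was confusing.",
])
for r in results:
    # Each result is a dict with a predicted label and a confidence score.
    print(r["label"], round(r["score"], 3))
```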

Scenario 4: Building Traditional Machine Learning Models

Best Fit: scikit-learn. For tasks like classification, regression, clustering, and data preprocessing on tabular data, scikit-learn remains the de facto standard. Its simplicity, efficiency, and comprehensive algorithm library make it the go-to library for classical (non-deep-learning) machine learning.
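A typical scikit-learn workflow chains preprocessing and an estimator into one pipeline and evaluates it with cross-validation. A minimal sketch on the bundled Iris dataset (no download needed); the StandardScaler plus LogisticRegression combination is just an example choice.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Bundling preprocessing with the estimator means the scaler is re-fit inside
# each cross-validation fold, so no statistics leak from the held-out data.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
scores = cross_val_score(model, X, y, cv=5)
print(f"mean accuracy: {scores.mean():.3f}")
```

Swapping in a different estimator (a random forest, a support vector machine) changes one line, which is much of scikit-learn's appeal for tabular work.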